Will AI ever be conscious?

Dr Tom McClelland is a Lecturer in the Faculty of Philosophy and a College Research Associate at Clare. Here, he ponders whether humans might create artificial intelligence with consciousness, and explores why this thorny question needs our attention.

An AI is a computer system that can perform the kinds of processes associated with the human mind. Many of our mental processes can already be emulated by AI. There are programs that can drive cars, recognise faces or compose music. Most of us keep a device in our pocket that can respond to speech, solve maths problems and beat us at chess. But is there anything a human mind can do that an AI never will? One possibility is that AI will process information in ever more complex ways but will never process that information consciously. After all, it’s one thing to process the colour of a traffic light but quite another to experience its redness. It’s one thing to add up a restaurant bill but quite another to be aware of your calculations. And it’s one thing to win a game of chess but quite another to feel the excitement of victory. Perhaps the human mind’s ability to generate subjective experiences is the one ability that a computer system can never emulate. Artificial intelligence might be ubiquitous but artificial consciousness is out of reach.

What should we make of this proposed limitation on the power of AI? Thinkers such as Ned Block have argued that consciousness is grounded in biology and that synthetic systems are just the wrong kind of thing to have subjective experiences. Others, such as Cambridge’s own Henry Shevlin, have argued that biological brains aren’t needed for consciousness and that we will have engineered conscious AI by the end of the century. I argue that both sides are wrong. More specifically, I think that both sides mistakenly assume that we know enough about consciousness to make an informed judgement about the prospects of conscious AI.

"Artificial intelligence might be ubiquitous but artificial consciousness is out of reach."

Human consciousness really is a mysterious thing. Cognitive neuroscience can tell us a lot about what’s going on in your mind as you read this article – how you perceive the words on the page, how you understand the meaning of the sentences and how you evaluate the ideas expressed. But what it can’t tell us is how all this comes together to constitute your current conscious experience. We’re gradually homing in on the neural correlates of consciousness – the neural patterns that occur when we process information consciously. But nothing about these neural patterns explains what makes them conscious while other neural processes occur unconsciously. And if we don’t know what makes us conscious, we don’t know whether AI might have what it takes. Perhaps what makes us conscious is the way our brain integrates information to form a rich model of the world. If that’s the case, an AI might achieve consciousness by integrating information in the same way. Or perhaps we’re conscious because of the details of our neurobiology. If that’s the case, no amount of programming will make an AI conscious. The problem is that we don’t know which (if either!) of these possibilities is true.

Once we recognise the limits of our current understanding, it looks like we should be agnostic about the possibility of artificial consciousness. We don’t know whether AI could have conscious experiences and, unless we crack the problem of consciousness, we never will. But here’s the tricky part: when we start to consider the ethical ramifications of artificial consciousness, agnosticism no longer seems like a viable option. Do AIs deserve our moral consideration? Might we have a duty to promote the well-being of computer systems and to protect them from suffering? Should robots have rights? These questions are bound up with the issue of artificial consciousness. If an AI can experience things then it plausibly ought to be on our moral radar.

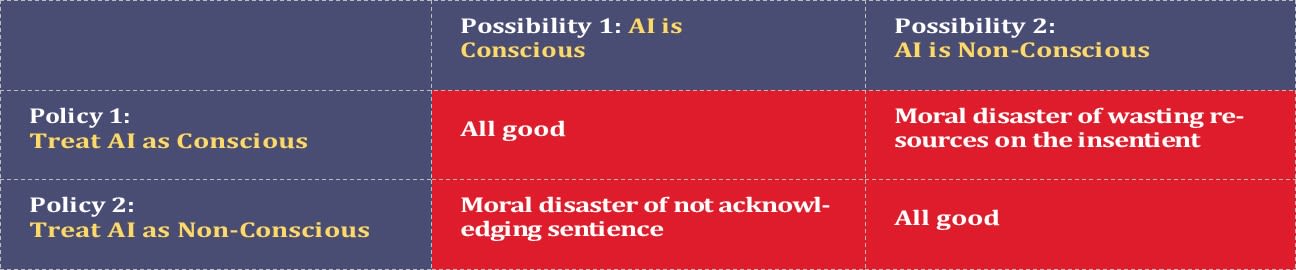

Conversely, if an AI lacks any subjective awareness then we probably ought to treat it like any other tool. But if we don’t know whether an AI is conscious, what should we do?

The first option is to act on the assumption that AIs lack consciousness. Even as they become more sophisticated and more integrated into our lives, we shouldn’t factor them into our moral decisions. Even if an AI says that it’s conscious, we should regard this as the accidental product of unconscious processes. But this gung-ho approach risks ethical disaster. We could create a new class of sentient minds and then systematically fail to recognise their sentience. The second option is to exercise caution and presume that AIs are conscious. Even if we have doubts about whether computer systems experience anything, we should act on the assumption that any sophisticated AI is sentient. But this risks a different kind of ethical disaster. We might dedicate to the well-being of insentient automata valuable resources that could have been used to help living, sentient humans. The four possibilities above (an AI that is or isn’t conscious, under either assumption) capture our predicament.

Faced with this predicament, perhaps we should apply the brakes on the development of AI until we have a better understanding of consciousness. This is what Thomas Metzinger argued when he recently proposed a global moratorium on deliberate attempts to create artificial consciousness. But how long should this moratorium last? If we have to wait until we have a complete explanation of consciousness, we could be waiting a long time, perhaps depriving the world of the benefits that more sophisticated AI might bring. And why think that AI will only be conscious if we deliberately engineer it to be so? Even with the moratorium, we risk creating conscious AI by accident. In fact, conscious AI might be with us already!

So what’s to be done? Obviously there’s important work for philosophers and cognitive scientists to do on the problem of consciousness, but we can’t rest our hopes on that being dealt with any time soon. Something we can do though is reflect more on why consciousness matters in the first place. Are we right to assume that having subjective experiences is what makes something worthy of moral consideration? Does all consciousness matter morally, or does consciousness have to take a particular form before we should start worrying about it? Or might it be that AI deserves rights regardless of whether it is conscious? Questions like these might not be easy to answer but, as AI marches forward, they become increasingly difficult to avoid.

This topic and others are explored in Tom McClelland’s new introductory book, What is Philosophy of Mind?, available here. Use the code MCC22 for a 20% discount until 30/09/22.